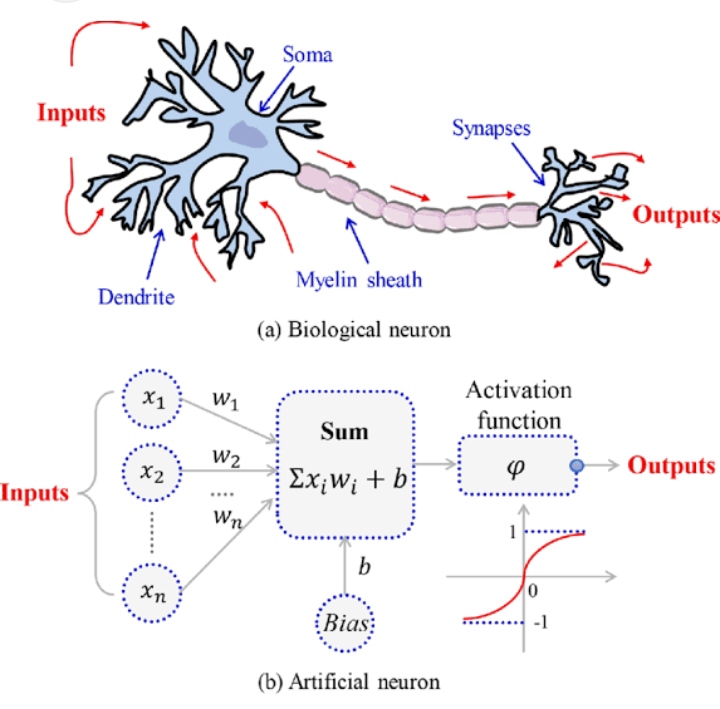

What is a neuron?

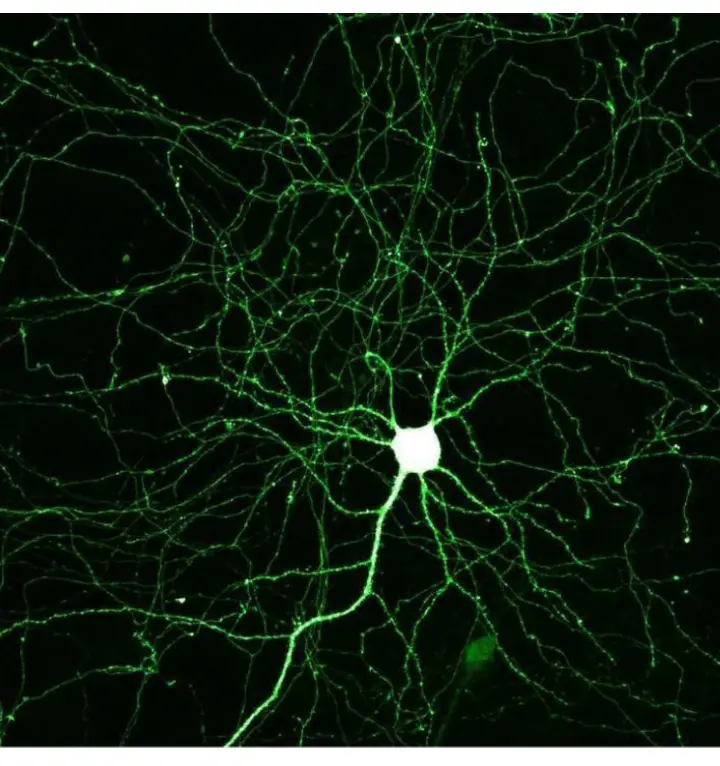

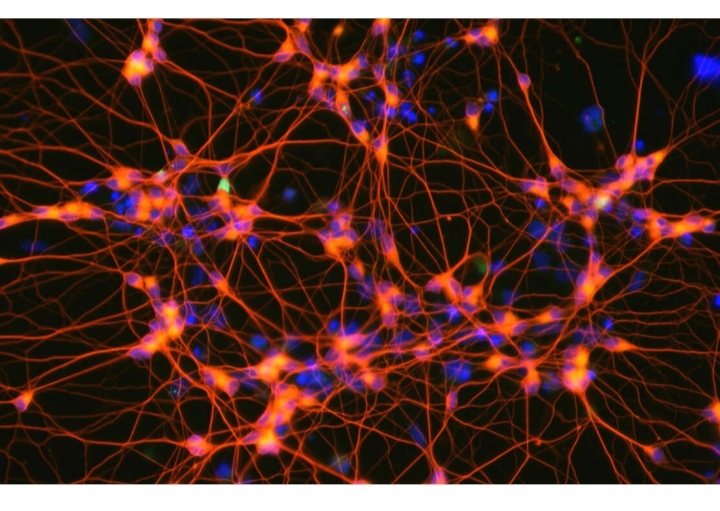

The basic building blocks of the brain and nervous system are neurons (also known as neurones or nerve cells). Neurons are the cells that receive sensory information from the outside world, send motor commands to our muscles, and transform and relay electrical signals at every step in between.

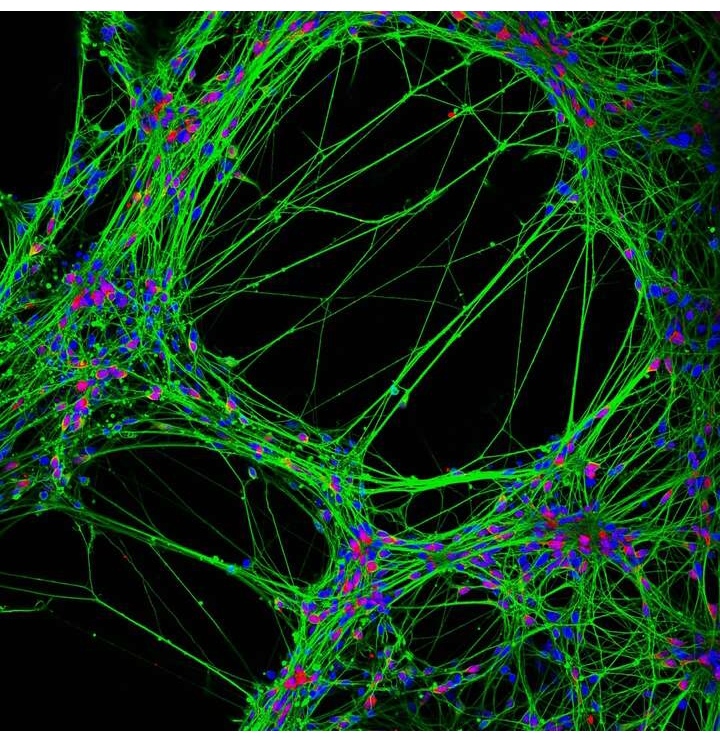

Beyond that, the interactions between neurons shape who we are as people. Even so, our approximately 100 billion neurons also interact closely with other cell types, broadly classified as glia (which may outnumber neurons, although this is not known for certain).

Neurogenesis is the process by which the brain produces new neurons, and it can occur even in adults.

Like the human brain, a novel artificial neuron operates using ions.

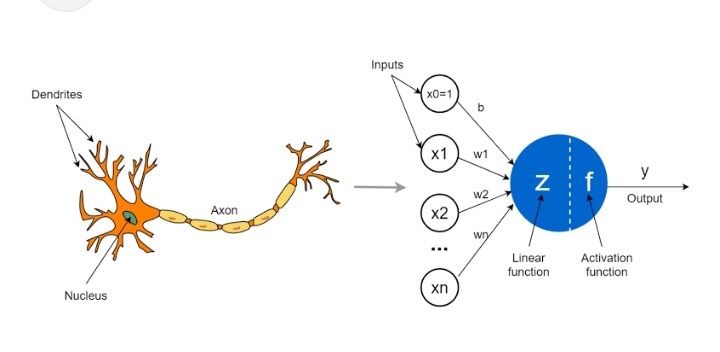

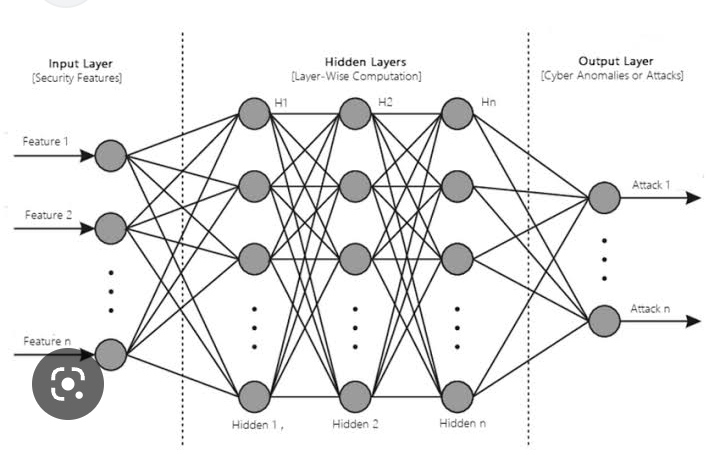

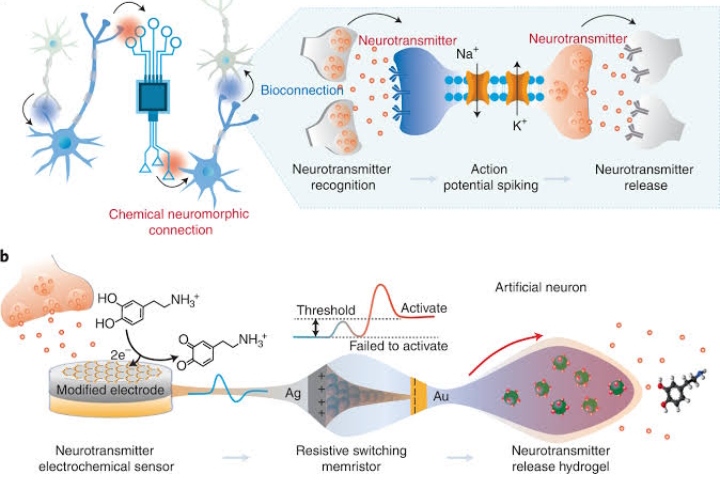

An artificial neuron is a mathematical function conceived as a model of biological neurons, and artificial neurons are the basic building blocks of an artificial neural network. An artificial neuron receives one or more inputs, which represent excitatory and inhibitory postsynaptic potentials at the neuron’s dendrites. Each input is usually weighted individually, the weighted inputs are summed, and the sum is passed through a non-linear function known as an activation or transfer function. The result, called the activation, is the neuron’s output and represents the action potential transmitted along its axon.
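
The weighted sum followed by an activation function can be written in a few lines. This is a minimal sketch, assuming a logistic sigmoid activation and an additive bias term (common choices, not the only ones); the function names `sigmoid` and `artificial_neuron` are illustrative.

```python
import math
from typing import Callable, Sequence


def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def artificial_neuron(
    inputs: Sequence[float],
    weights: Sequence[float],
    bias: float = 0.0,
    activation: Callable[[float], float] = sigmoid,
) -> float:
    """Weight each input, sum the results plus a bias, then apply the activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Positive weights act like excitatory inputs, negative weights like inhibitory ones.
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(weighted_sum)


if __name__ == "__main__":
    # Two excitatory inputs and one inhibitory input; the weighted sum is 0.2.
    print(round(artificial_neuron([1.0, 0.5, 1.0], [0.8, 0.4, -0.6], bias=-0.2), 4))  # prints 0.5498
```

Swapping `activation` for a step function turns the same neuron into the threshold unit described in the history section.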

Research into brain-inspired electronics is very active. Researchers from the CNRS and Ecole Normale Supérieure – PSL have proposed a way to build artificial neurons that, like nerve cells, use ions to carry information. In a paper published in Science on August 6th, 2021, they describe how devices made of a single layer of water that transports ions within graphene nanoslits have the same transmission capacity as a neuron.

The human brain carries out numerous complex tasks on an energy budget equivalent to two bananas a day. This high energy efficiency depends largely on the neuron, the brain’s fundamental building block. Neurons contain ion channels: nanometric pores in the cell membrane that open and close in response to external stimuli. The resulting ion fluxes trigger action potentials, the electrical impulses that allow neurons to communicate.

Artificial intelligence can perform all of these functions, but only by consuming tens of thousands of times more energy than the human brain. The current scientific challenge is to build electronic systems as energy-efficient as the brain, for instance by using ions rather than electrons to carry information.

Nanofluidics, the study of how fluids behave in channels less than 100 nanometers wide, offers several avenues toward this goal. According to a new study from the ENS Laboratoire de Physique (CNRS/ENS-PSL/Sorbonne Université/Université de Paris), an artificial neuron prototype could be built from extremely thin graphene slits holding a single layer of water molecules.

The researchers showed that, in the presence of an electric field, the ions in this water layer assemble into elongated clusters that exhibit a memristor effect: the clusters retain a memory of some of the stimuli they have previously received.
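
A memristor is a resistor whose resistance depends on the history of the current that has flowed through it. To make that memory effect concrete, here is a toy linear-drift model in the spirit of the textbook HP memristor model. It is a generic abstraction with made-up parameter values (`r_on`, `r_off`, `k` are hypothetical), not a model of the ion clusters studied by the ENS team.

```python
from dataclasses import dataclass


@dataclass
class ToyMemristor:
    """Linear-drift memristor toy model (HP-style); NOT a model of the ionic clusters."""

    r_on: float = 100.0      # resistance when the state is fully "on" (ohms), illustrative
    r_off: float = 16_000.0  # resistance when the state is fully "off" (ohms), illustrative
    k: float = 1e5           # hypothetical rate at which passing charge moves the state
    x: float = 0.0           # internal state in [0, 1]; it is what stores the memory

    def resistance(self) -> float:
        # Linear interpolation between r_off (x = 0) and r_on (x = 1).
        return self.r_on * self.x + self.r_off * (1.0 - self.x)

    def apply(self, voltage: float, dt: float) -> float:
        """Hold `voltage` for `dt` seconds and return the current that flowed."""
        current = voltage / self.resistance()
        # The state drifts in proportion to the charge (current * dt), clipped to [0, 1].
        self.x = min(1.0, max(0.0, self.x + self.k * current * dt))
        return current


if __name__ == "__main__":
    m = ToyMemristor()
    print(f"initial:      {m.resistance():8.0f} ohms")
    for _ in range(50):  # a train of positive pulses lowers the resistance
        m.apply(voltage=1.0, dt=1e-3)
    print(f"after pulses: {m.resistance():8.0f} ohms")
    for _ in range(50):  # no stimulus: the state, and so the resistance, persists
        m.apply(voltage=0.0, dt=1e-3)
    print(f"after rest:   {m.resistance():8.0f} ohms")
```

The key property is in the second loop: once the stimulus stops, the device keeps the resistance its past inputs gave it, which is what "retaining previous stimuli" means for a memristor.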

To extend the comparison with the brain, the graphene slits reproduce ion channels, clusters, and ion fluxes. The researchers also showed how to combine these clusters to reproduce the physical mechanism of action potential emission and, by extension, of information transmission.
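
In computational neuroscience, the "integrate, cross a threshold, fire, reset" behavior of action potential emission is often abstracted as a leaky integrate-and-fire neuron. The sketch below uses that standard simplified model with dimensionless, illustrative parameters (`tau`, `v_threshold`, and the input levels are assumptions); it is not the mechanism the researchers derived for graphene slits.

```python
from __future__ import annotations


def simulate_lif(
    drive: list[float],
    dt: float = 1e-3,
    tau: float = 20e-3,
    v_rest: float = 0.0,
    v_threshold: float = 1.0,
    v_reset: float = 0.0,
) -> tuple[list[float], list[int]]:
    """Leaky integrate-and-fire neuron with dimensionless, illustrative units.

    Returns the membrane-potential trace and the indices of the steps that spiked.
    """
    v = v_rest
    trace: list[float] = []
    spikes: list[int] = []
    for step, i_in in enumerate(drive):
        # Forward-Euler step of tau * dv/dt = -(v - v_rest) + i_in:
        # the potential leaks back toward rest while integrating the input.
        v += dt / tau * (-(v - v_rest) + i_in)
        if v >= v_threshold:
            spikes.append(step)  # threshold crossed: emit a spike ("action potential")
            v = v_reset          # then reset the membrane potential
        trace.append(v)
    return trace, spikes


if __name__ == "__main__":
    weak = [0.5] * 1000    # weak input: v settles below threshold, so no spikes
    strong = [1.5] * 1000  # strong input: regular spiking
    print(len(simulate_lif(weak)[1]), len(simulate_lif(strong)[1]))
```

Weak inputs leak away without a response, while inputs strong enough to push the potential past the threshold produce a regular train of spikes.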

The French team is now pursuing this theoretical work experimentally, in collaboration with researchers from the University of Manchester (UK). The current objective is to demonstrate experimentally that such devices can implement simple learning algorithms, which could serve as the basis for future electronic memories.

Naturally, efforts to replicate brain activity could also be used to improve neurological function. For instance, Elon Musk’s Neuralink promises to implant computer chips in the brain and usher in a new era of “super-human cognition,” in which the computational power of machine-learning analytics is combined with the (so far) unmatched creative intuition of the human mind.

A brief history of artificial neurons.

The first artificial neuron was the Threshold Logic Unit (TLU), also known as the Linear Threshold Unit, proposed by Warren McCulloch and Walter Pitts in 1943.

The model was intended as a computational model of the “nerve net” in the brain. [20] It used a threshold, equivalent to the Heaviside step function, as its transfer function. Initially, only a simple model was considered, with binary inputs and outputs, some restrictions on the possible weights, and a more flexible threshold value.

Networks of such units can implement any boolean function: a single unit can implement AND and OR (and, with a negative weight, NOT), and these can be combined in disjunctive or conjunctive normal form. Researchers soon found that cyclic networks, with feedback through neurons, could describe dynamical systems with memory. However, because they are easier to analyze, most research has focused (and still does) on strictly feed-forward networks.
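
The boolean-function argument can be checked directly. This is a minimal sketch, assuming integer weights and a "fire when the weighted sum reaches the threshold" convention; the gate names are illustrative. Single threshold units give AND, OR, and NOT, and composing them in disjunctive normal form yields XOR, which no single unit can compute.

```python
from itertools import product
from typing import Sequence


def tlu(inputs: Sequence[int], weights: Sequence[float], threshold: float) -> int:
    """Threshold logic unit: Heaviside step applied to the weighted sum of the inputs."""
    return 1 if sum(x * w for x, w in zip(inputs, weights)) >= threshold else 0


def and_gate(a: int, b: int) -> int:
    return tlu([a, b], [1, 1], threshold=2)  # fires only if both inputs are on


def or_gate(a: int, b: int) -> int:
    return tlu([a, b], [1, 1], threshold=1)  # fires if at least one input is on


def not_gate(a: int) -> int:
    return tlu([a], [-1], threshold=0)       # an inhibitory weight inverts the input


def xor_gate(a: int, b: int) -> int:
    # XOR is not linearly separable, so no single TLU computes it, but a small
    # network does, via disjunctive normal form: (a AND NOT b) OR (NOT a AND b).
    return or_gate(and_gate(a, not_gate(b)), and_gate(not_gate(a), b))


if __name__ == "__main__":
    print("a b AND OR XOR")
    for a, b in product((0, 1), repeat=2):
        print(a, b, and_gate(a, b), or_gate(a, b), xor_gate(a, b))
```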

A chemically mediated artificial neuron. Image courtesy of Nature.

Frank Rosenblatt’s perceptron was an important and innovative artificial neural network that used the linear threshold function. Applied in machines with adaptive capabilities, this model already allowed more flexible weight values in its neurons. In 1960, Bernard Widrow first proposed representing the threshold value as a bias term.
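
The perceptron learning rule, with the threshold folded into a bias term, fits in a few lines. This is a minimal sketch, assuming zero-initialized weights and a learning rate of 0.1; `predict` and `train_perceptron` are illustrative names. On linearly separable data such as logical AND, the rule is guaranteed to converge.

```python
from __future__ import annotations

from typing import Sequence


def predict(x: Sequence[float], w: list[float], b: float) -> int:
    # The threshold is represented as a bias: fire when w·x + b >= 0.
    return 1 if sum(xi * wi for xi, wi in zip(x, w)) + b >= 0 else 0


def train_perceptron(
    samples: Sequence[Sequence[float]],
    labels: Sequence[int],
    lr: float = 0.1,
    epochs: int = 100,
) -> tuple[list[float], float]:
    """Perceptron learning rule: nudge weights and bias toward misclassified targets."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, labels):
            error = y - predict(x, w, b)  # -1, 0, or +1
            if error:
                errors += 1
                w = [wi + lr * error * xi for wi, xi in zip(w, x)]
                b += lr * error           # the bias is learned like any other weight
        if errors == 0:  # every sample classified correctly: training has converged
            break
    return w, b


if __name__ == "__main__":
    X = [(0, 0), (0, 1), (1, 0), (1, 1)]
    y_and = [0, 0, 0, 1]
    w, b = train_perceptron(X, y_and)
    print(w, b, [predict(x, w, b) for x in X])
```

Treating the bias as an extra weight means the same update rule adjusts both, so there is no separate threshold to tune by hand.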

When neural network research revived in the late 1980s, researchers began to consider neurons with more continuous shapes. Because such activation functions can be differentiated, gradient descent and other optimization methods can be used directly to adjust the weights.
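
Differentiable activations are what make gradient-based training possible. A minimal sketch, assuming a single sigmoid neuron, binary cross-entropy loss, and full-batch gradient descent; the learning rate, epoch count, and function names are arbitrary illustrative choices.

```python
from __future__ import annotations

import math
from typing import Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def bce_loss(samples, labels, w, b) -> float:
    """Mean binary cross-entropy of a single sigmoid neuron."""
    total = 0.0
    for x, y in zip(samples, labels):
        p = sigmoid(sum(xi * wi for xi, wi in zip(x, w)) + b)
        p = min(max(p, 1e-12), 1 - 1e-12)  # guard against log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(samples)


def train_sigmoid_neuron(
    samples: Sequence[Sequence[float]],
    labels: Sequence[float],
    lr: float = 0.5,
    epochs: int = 2000,
) -> tuple[list[float], float]:
    """Full-batch gradient descent on the binary cross-entropy loss."""
    n, d = len(samples), len(samples[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(samples, labels):
            p = sigmoid(sum(xi * wi for xi, wi in zip(x, w)) + b)
            # Because the sigmoid is differentiable, dLoss/dz simplifies to (p - y)
            # for cross-entropy; the chain rule then gives each weight's gradient.
            delta = p - y
            grad_w = [g + delta * xi for g, xi in zip(grad_w, x)]
            grad_b += delta
        # Step against the average gradient.
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


if __name__ == "__main__":
    X = [(0, 0), (0, 1), (1, 0), (1, 1)]
    y_or = [0, 1, 1, 1]
    print("loss before:", bce_loss(X, y_or, [0.0, 0.0], 0.0))
    w, b = train_sigmoid_neuron(X, y_or)
    print("loss after: ", bce_loss(X, y_or, w, b))
```

With a step function, as in the TLU, the gradient would be zero almost everywhere and this update could not be computed.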

Neural networks also began to be applied as general function approximation models. Backpropagation, the best-known training algorithm, has been rediscovered several times, but its first development is credited to Paul Werbos.
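
Backpropagation is the chain rule applied layer by layer, from the output back toward the inputs. This is a minimal sketch, assuming a tiny 2-2-1 network with sigmoid units and squared-error loss (all names are illustrative). The analytic gradients it computes are compared against central finite differences, a standard way to validate a backpropagation implementation.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward_backward(x, y, W1, b1, W2, b2):
    """Forward and backward pass for a 2-layer network of sigmoid units.

    W1: hidden-layer weight rows; b1: hidden biases;
    W2: output weights (one per hidden unit); b2: output bias.
    Loss is the squared error 0.5 * (out - y) ** 2. Returns (loss, gradients).
    """
    # Forward pass: hidden activations, then a single sigmoid output unit.
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + bj) for row, bj in zip(W1, b1)]
    out = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    loss = 0.5 * (out - y) ** 2

    # Backward pass: chain rule from the output back toward the inputs.
    d_out = (out - y) * out * (1.0 - out)  # dL/dz for the output unit
    gW2 = [d_out * hj for hj in h]
    gb2 = d_out
    # Error signal of each hidden unit: output error * its weight * sigmoid'(z).
    d_h = [d_out * w * hj * (1.0 - hj) for w, hj in zip(W2, h)]
    gW1 = [[dj * xi for xi in x] for dj in d_h]
    gb1 = d_h
    return loss, (gW1, gb1, gW2, gb2)


def numerical_grad_w1(x, y, W1, b1, W2, b2, i, j, eps=1e-6):
    """Central finite-difference estimate of dL/dW1[i][j], used to check backprop."""
    plus = [row[:] for row in W1]
    minus = [row[:] for row in W1]
    plus[i][j] += eps
    minus[i][j] -= eps
    loss_plus, _ = forward_backward(x, y, plus, b1, W2, b2)
    loss_minus, _ = forward_backward(x, y, minus, b1, W2, b2)
    return (loss_plus - loss_minus) / (2 * eps)


if __name__ == "__main__":
    rng = random.Random(0)
    W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)]
    b1 = [rng.uniform(-1, 1) for _ in range(2)]
    W2 = [rng.uniform(-1, 1) for _ in range(2)]
    b2 = rng.uniform(-1, 1)
    x, y = [0.5, -1.0], 1.0
    _, (gW1, _, _, _) = forward_backward(x, y, W1, b1, W2, b2)
    for i in range(2):
        for j in range(2):
            numeric = numerical_grad_w1(x, y, W1, b1, W2, b2, i, j)
            print(f"W1[{i}][{j}]: backprop={gW1[i][j]:.8f} numeric={numeric:.8f}")
```

Backpropagation computes all gradients in one backward sweep, whereas the finite-difference check needs two extra forward passes per weight. That difference is why backpropagation, and not numerical differentiation, is used for training.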

Disadvantages of artificial neurons.

Significant computational resources are needed

Neural networks are composed of many interconnected processing nodes loosely modeled on the brain. Each node computes an output from its weights, and during training the network adjusts those weights by backpropagating error gradients through the nodes. Because they have so many parameters, ANNs also require larger training datasets.
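
To see how quickly parameters accumulate, here is a small helper that counts the weights and biases of a fully connected (dense) network. It is a sketch with hypothetical layer sizes; convolutional or recurrent layers would need different formulas.

```python
from typing import Sequence


def dense_param_count(layer_sizes: Sequence[int]) -> int:
    """Number of trainable parameters in a fully connected network.

    Each layer mapping n_in -> n_out units has n_in * n_out weights plus n_out biases.
    """
    return sum(
        n_in * n_out + n_out
        for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
    )


if __name__ == "__main__":
    # A modest classifier for 28x28 grayscale images (784 inputs) with 10 classes.
    print(dense_param_count([784, 512, 256, 10]))  # prints 535818
```

Even this small network has over half a million parameters, and every one of them receives a gradient update at each training step, which is a large part of the computational cost.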

Neural network training requires large datasets.

Neural networks cannot generalize well from a small training set. On smaller datasets, neural network models tend to overfit: they memorize the training data and consequently fail to generalize to new examples.
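
Overfitting is easy to reproduce without a neural network at all. The sketch below uses the simplest possible "memorizer" in place of a network: a polynomial that passes exactly through ten noisy training points. The target function, noise level, and seed are arbitrary assumptions. Training error is zero, yet error on fresh points from the same function is not. An over-parameterized network trained on a small dataset fails in the same way.

```python
from __future__ import annotations

import math
import random


def lagrange_interpolate(xs: list[float], ys: list[float], x: float) -> float:
    """Evaluate the unique degree-(n-1) polynomial that passes through all n points."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                basis *= (x - xj) / (xi - xj)
        total += yi * basis
    return total


def mse(pred: list[float], target: list[float]) -> float:
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def overfit_demo(seed: int = 42, noise: float = 0.2) -> tuple[float, float]:
    """Memorize 10 noisy samples of sin(2*pi*x); return (train MSE, test MSE)."""
    rng = random.Random(seed)

    def target(x: float) -> float:
        return math.sin(2 * math.pi * x)

    train_x = [i / 9 for i in range(10)]
    train_y = [target(x) + rng.gauss(0, noise) for x in train_x]
    # Fresh samples from the same function, halfway between the training points.
    test_x = [(i + 0.5) / 9 for i in range(9)]
    test_y = [target(x) + rng.gauss(0, noise) for x in test_x]

    def model(x: float) -> float:
        return lagrange_interpolate(train_x, train_y, x)

    return mse([model(x) for x in train_x], train_y), mse([model(x) for x in test_x], test_y)


if __name__ == "__main__":
    train_mse, test_mse = overfit_demo()
    # Training error is zero (every point is memorized); test error is not.
    print(f"train MSE: {train_mse:.3g}  test MSE: {test_mse:.3g}")
```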

Careful consideration must be given to data preparation for neural network models.

A model may perform worse if the data are not scaled properly. Standardization or normalization techniques can address this problem. In addition, if the input dataset is unbalanced, a neural network may learn patterns that do not represent the real world, which ultimately results in a less accurate model.
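
Both fixes mentioned above are short to write down. This sketch assumes numeric features held in plain Python lists and shows three helpers: z-score standardization, min-max normalization, and, for unbalanced labels, inverse-frequency class weights. The weighting uses the same formula as scikit-learn's `class_weight="balanced"` heuristic. Resampling the data is another common option that is not shown here. The example values are hypothetical.

```python
from __future__ import annotations

import statistics
from collections import Counter
from typing import Hashable, Sequence


def standardize(values: Sequence[float]) -> list[float]:
    """Rescale to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]  # constant feature: nothing to scale
    return [(v - mean) / std for v in values]


def min_max_normalize(values: Sequence[float]) -> list[float]:
    """Rescale linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def inverse_frequency_weights(labels: Sequence[Hashable]) -> dict[Hashable, float]:
    """Weight each class by n_samples / (n_classes * class_count) so rare classes count more."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}


if __name__ == "__main__":
    incomes = [28_000, 35_000, 41_000, 52_000, 250_000]  # hypothetical, one large outlier
    print(standardize(incomes))
    print(min_max_normalize(incomes))
    print(inverse_frequency_weights(["ok"] * 90 + ["fraud"] * 10))
```

In practice, the scaling statistics (mean, standard deviation, minimum, maximum) should be computed on the training split only and then reused for validation and test data.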

Neural network models are challenging to understand.

They are made up of many interconnected processing nodes, and it is difficult to understand how the node weights combine to produce a given prediction. Neural networks might not be ideal if you need to present model results in a way that is easy to explain to a non-technical audience.

It can be difficult to optimize neural network models for production.

You can build neural networks quickly with libraries such as Keras. But once you’ve created a model, you need to think about how to deploy it in production, which can be challenging because neural networks can be computationally intensive.
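
One common way to make a trained model cheaper to run is post-training quantization: storing weights as 8-bit integers instead of 32-bit floats, which cuts weight storage by about a factor of four. The sketch below shows the idea on a plain list of hypothetical weights using symmetric per-tensor scaling. Production toolchains such as TensorFlow Lite implement more elaborate variants, and this is not their API. The integers here are ordinary Python ints; a real runtime would pack them into an int8 array.

```python
from __future__ import annotations

from typing import Sequence


def quantize_int8(weights: Sequence[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clip to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> list[float]:
    """Map the integers back to approximate float weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.42, -1.37, 0.005, 0.88, -0.61]  # hypothetical layer weights
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print(q, f"scale={scale:.5f}", f"max error={worst:.5f}")
```

The rounding error per weight is at most half a quantization step (`scale / 2`). Whether that loss of precision is acceptable has to be checked by re-evaluating the quantized model's accuracy.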